Live migration has been an important part of KVM virtualization since the day it was designed. However, once you dive into the control plane of libvirt live migration, it becomes quite complex. So I will start by describing its basic implementation.

Libvirt + QEMU basic building blocks

For KVM-based virtualization, libvirt + QEMU is normally used to manage a guest’s lifecycle. To understand live migration, we first need to know the basic parts that sit between libvirt and QEMU.

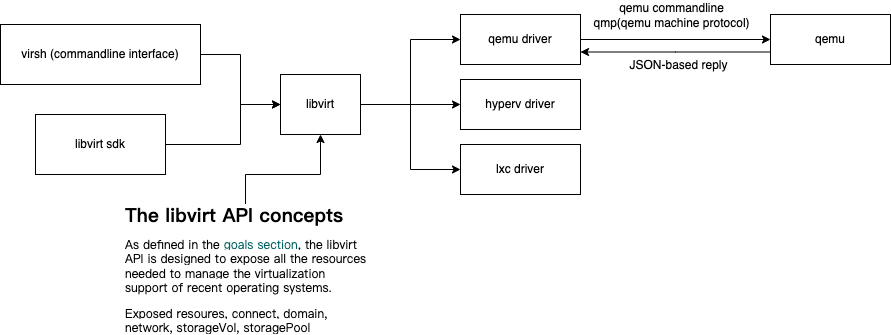

The figure above introduces the basic parts. I list them here from left to right:

- virsh: a command-line interface for managing domains

- libvirt SDK: language bindings (Python, Go, …) that access libvirt through its defined API

- libvirt API: exposes connect (the connection to libvirt), domain (guest), network (the virtual network of a hypervisor), storage volume (a block device that a domain can use), and storage pool (a logical container used to allocate and store storage volumes)

- QEMU driver: the libvirt driver for QEMU, which translates libvirt API calls into the corresponding QEMU operations

- QEMU: a generic and open source machine emulator and virtualizer

- QMP: the QEMU Machine Protocol, a JSON-based protocol that allows applications to control a QEMU instance

So when we perform a live migration, all of these parts are involved.
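To make the QMP layer a little more concrete, here is a minimal sketch of the JSON messages that travel over a QMP socket. `qmp_capabilities`, `query-migrate`, and `migrate` are real QMP commands; the helper name `qmp_command` and the port in the example URI are hypothetical. In practice libvirt's QEMU driver builds and sends these messages for you.

```python
import json


def qmp_command(name, arguments=None):
    """Serialize one QMP command as a JSON string (hypothetical helper)."""
    message = {"execute": name}
    if arguments:
        message["arguments"] = arguments
    return json.dumps(message)


# A QMP session begins with capability negotiation; only after that
# are other commands, such as query-migrate, accepted.
handshake = qmp_command("qmp_capabilities")
status_query = qmp_command("query-migrate")
# The port 4444 below is an assumption chosen for illustration.
start_migration = qmp_command("migrate", {"uri": "tcp:10.0.0.1:4444"})

for line in (handshake, status_query, start_migration):
    print(line)
```

Nothing here opens a socket; the sketch only shows the shape of the messages that the QEMU driver exchanges with QEMU.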

Libvirt live migration

For the control plane (libvirt), several concepts need to be introduced before we try to comprehend its migration logic.

According to https://libvirt.org/migration.html, there are two options for network data transport.

- Native transport: uses QEMU sockets to transport data

  - Requires network connectivity between the hypervisors (firewall issues must be solved)

  - Encryption support depends on the hypervisor

  - Better performance (minimises the number of data copies)

- Tunnelled transport: data is transported through the libvirt RPC protocol

  - Encryption supported

  - Fewer firewall issues

  - Worse performance (encryption, plus extra data copies through libvirtd)

Libvirt also supports different control-plane modes, and they share some common options and rules:

- A peer2peer flag decides whether the client connects to both libvirtd servers, or the source libvirtd server manages the connection to the destination itself

- A destination URI of the form ‘qemu+ssh://desthost/system’ is used for the libvirtd connection

- An optional data transport URI such as ‘tcp://10.0.0.1/’ tells libvirt to use TCP to transport data to the hypervisor or libvirtd server

- Normally libvirtd on the target automatically determines its native hypervisor URI, so it is not required in the migration API

- If the hypervisor does not offer encryption itself, tunnelled migration should be used

- When the libvirt daemon cannot access the network, use UNIX socket migration

- For VMs with disks on non-shared storage, remember to copy all storage
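As a small illustration of the two kinds of URI mentioned above, the sketch below splits them with Python's standard `urllib.parse`. The function names `describe_libvirt_uri` and `describe_migrate_uri` are hypothetical; libvirt has its own URI parser, so this only shows which part of each URI carries which meaning.

```python
from urllib.parse import urlsplit


def describe_libvirt_uri(uri):
    """Split a libvirt connection URI into driver, transport, host, path."""
    parts = urlsplit(uri)
    # The scheme has the form "driver+transport"; the transport is optional.
    driver, _, transport = parts.scheme.partition("+")
    return {
        "driver": driver,
        "transport": transport or None,
        "host": parts.hostname,
        "path": parts.path,
    }


def describe_migrate_uri(uri):
    """Split a data transport URI into protocol, host, and optional port."""
    parts = urlsplit(uri)
    return {"protocol": parts.scheme, "host": parts.hostname, "port": parts.port}


print(describe_libvirt_uri("qemu+ssh://desthost/system"))
print(describe_migrate_uri("tcp://10.0.0.1/"))
```

The destination URI names how to reach libvirtd (driver `qemu` over `ssh`), while the data transport URI only names where the migration stream should go.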

The following migration modes are supported by libvirt, and all are available for the QEMU driver:

- Native migration, client to two libvirtd servers

- Native migration, client to and peer2peer between two libvirtd servers

- Tunnelled migration, client and peer2peer between two libvirtd servers

- Native migration, client to one libvirtd server

- Native migration, peer2peer between two libvirtd servers

- Tunnelled migration, peer2peer between two libvirtd servers

- Migration using UNIX sockets

- Migration of VMs using non-shared images for disks
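At the API level, several of these modes differ only in the flags passed to the migration call. The sketch below mirrors a few values from libvirt's `virDomainMigrateFlags` enum so that it runs without the libvirt bindings; the class `MigrateFlag` and the function `flags_for` are hypothetical names, and real code should use the `VIR_MIGRATE_*` constants from the bindings instead of these copies.

```python
from enum import IntFlag


class MigrateFlag(IntFlag):
    """Local copies of a few virDomainMigrateFlags values (illustration only)."""
    LIVE = 1 << 0             # VIR_MIGRATE_LIVE
    PEER2PEER = 1 << 1        # VIR_MIGRATE_PEER2PEER
    TUNNELLED = 1 << 2        # VIR_MIGRATE_TUNNELLED
    NON_SHARED_DISK = 1 << 6  # VIR_MIGRATE_NON_SHARED_DISK


def flags_for(peer2peer=False, tunnelled=False, copy_storage=False):
    """Combine flags for a live migration in the requested mode."""
    if tunnelled and not peer2peer:
        # libvirt rejects tunnelled migration without the peer2peer flag.
        raise ValueError("tunnelled migration requires peer2peer")
    flags = MigrateFlag.LIVE
    if peer2peer:
        flags |= MigrateFlag.PEER2PEER
    if tunnelled:
        flags |= MigrateFlag.TUNNELLED
    if copy_storage:
        flags |= MigrateFlag.NON_SHARED_DISK
    return flags


print(flags_for())                                # native, client to two servers
print(flags_for(peer2peer=True))                  # native, peer2peer
print(flags_for(peer2peer=True, tunnelled=True))  # tunnelled, peer2peer
print(flags_for(copy_storage=True))               # non-shared disk images
```

The check at the top reflects why every tunnelled mode in the list above is also a peer2peer mode.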

Libvirt keepalive of client

Libvirt uses a client/server (C/S) architecture, and during migration it needs to support ‘client to two libvirtd servers’ or ‘client to and peer2peer between two libvirtd servers’.

So connection management between client and server, or between server and server, is important for libvirt. Some common concerns for this architecture:

- Client and server connection

  - Async tasks do not rely on the connection if the server implements idempotency

    - The domain object lock helps with idempotency

  - Sync tasks rely on the connection

    - All sync tasks should fail if the connection keepalive times out

- Server and server connection

  - The source server should be treated as a client, so the same rules apply as for a client and server connection

To meet these basic requirements, libvirt introduced keepalive for client connections. A client can set a keepalive timeout through an interval and a count (the server must support this, because a keepalive response is required).

Note:

- Default settings are configured in libvirtd.conf

- Setting the keepalive interval to 0 disables keepalive for the client
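To see how interval and count combine into an effective timeout, here is a minimal sketch. It assumes the rule described in the comments of libvirtd.conf, where a silent connection is closed after roughly interval * (count + 1) seconds, and it treats 0 as "disabled" as in the note above. The function name `keepalive_deadline` and the example values are assumptions for illustration.

```python
def keepalive_deadline(interval, count):
    """Approximate seconds of silence before a connection is declared dead.

    Assumes the peer is probed every `interval` seconds and that the
    connection is dropped once `count` probes have gone unanswered.
    Returns None when keepalive is disabled (interval == 0).
    """
    if interval == 0:
        return None
    return interval * (count + 1)


# Example values only: probe every 5 seconds, tolerate 5 missed replies.
print(keepalive_deadline(5, 5))
# Keepalive disabled: no deadline applies.
print(keepalive_deadline(0, 5))
```

With a short interval and a small count, a brief network stall during a long migration can be enough to cross this deadline.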

The interface declared in src/rpc/virkeepalive.h is quite simple:

    virKeepAlivePtr virKeepAliveNew(int interval,

Libvirt keepalive timeout issue

The Bugzilla report at https://bugzilla.redhat.com/show_bug.cgi?id=1367620 explains a live migration failure caused by a poor network: the connection between the libvirtd servers goes down, which is reported as a keepalive timeout error.

In the libvirtd log (https://libvirt.org/kbase/debuglogs.html) we can see:

    2023-01-05 05:07:36.721+0000: 114785: info : virKeepAliveTimerInternal:131 : RPC_KEEPALIVE_TIMEOUT: ka=0x7f6af4006c60 client=0x7f6af400
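When the log is large, it can help to pull out every keepalive timeout and the client pointer it belongs to before searching further. The sketch below does this with a regular expression matched against the line shown above; the function name `find_keepalive_timeouts` is hypothetical, and the pattern only assumes the `ka=` and `client=` fields visible in that line.

```python
import re

# The sample line as shown above; the client value is copied as it appears.
LOG_LINE = (
    "2023-01-05 05:07:36.721+0000: 114785: info : "
    "virKeepAliveTimerInternal:131 : RPC_KEEPALIVE_TIMEOUT: "
    "ka=0x7f6af4006c60 client=0x7f6af400"
)

PATTERN = re.compile(
    r"RPC_KEEPALIVE_TIMEOUT: ka=(?P<ka>0x[0-9a-f]+) client=(?P<client>0x[0-9a-f]+)"
)


def find_keepalive_timeouts(lines):
    """Return (ka, client) pointer pairs for every keepalive timeout line."""
    hits = []
    for line in lines:
        match = PATTERN.search(line)
        if match:
            hits.append((match["ka"], match["client"]))
    return hits


for ka, client in find_keepalive_timeouts([LOG_LINE]):
    # The client pointer is the value to search for in the rest of the log.
    print(f"keepalive {ka} timed out for client {client}")
```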

Searching for client=0x7f6af400, we find that it is a connection created during migration:

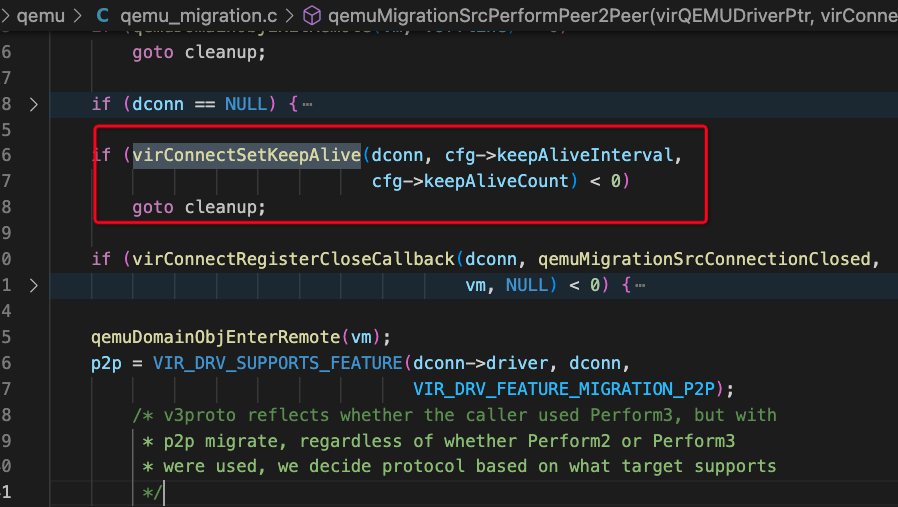

Here dconn is the URI of the destination libvirtd server.

For peer2peer live migration, this issue can be worked around by using separate networks for the libvirtd connection and for data transport.
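One way to express this workaround is to give virsh a destination URI that resolves on the management network and a separate data transport URI on the migration network. The sketch below only builds the command line; `--live`, `--p2p`, and `--migrateuri` are existing `virsh migrate` options, while the function name `p2p_migrate_argv`, the guest name, and the assumption that `desthost` and `10.0.0.1` sit on different networks are all hypothetical.

```python
import shlex


def p2p_migrate_argv(domain, dest_uri, migrate_uri):
    """Build a virsh command for peer2peer live migration.

    dest_uri is used for the libvirtd to libvirtd connection and
    migrate_uri for the migration data stream.
    """
    return [
        "virsh", "migrate", "--live", "--p2p",
        "--migrateuri", migrate_uri,
        domain, dest_uri,
    ]


argv = p2p_migrate_argv(
    "guest1",                      # hypothetical domain name
    "qemu+ssh://desthost/system",  # control connection (management network)
    "tcp://10.0.0.1/",             # data transport (migration network)
)
print(shlex.join(argv))
```

With this split, heavy migration traffic is less likely to starve the keepalive messages on the libvirtd connection.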