See the header comment of virt/kvm/kvm_main.c in the Linux kernel:

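```c
/*
 * Kernel-based Virtual Machine driver for Linux
 *
 * This module enables machines with Intel VT-x extensions to run virtual
 * machines without emulation or binary translation.
 *
 * Copyright (C) 2006 Qumranet, Inc.
 * Copyright 2010 Red Hat, Inc. and/or its affiliates.
 *
 * Authors:
 *   Avi Kivity   <avi@qumranet.com>
 *   Yaniv Kamay  <yaniv@qumranet.com>
 */
```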

It raises a few questions:

- What does "kernel-based" mean?

- What is VT-x?

- Emulation? Binary translation?

- Who is Avi Kivity?

- Is there any user-mode hypervisor?

Kernel-based

Kernel-based Virtual Machine (KVM) is a virtualization module in the Linux kernel that allows the kernel to function as a hypervisor. It was merged into the mainline Linux kernel in version 2.6.20, which was released on February 5, 2007. [1]

Its generic code lives under virt/kvm in the Linux source tree, with the architecture-specific parts under arch/<arch>/kvm.

VT-x

```c
 * This module enables machines with Intel VT-x extensions to run virtual
 * machines without emulation or binary translation.
```

The comment mentions Intel VT-x extensions.

Previously codenamed “Vanderpool”, VT-x represents Intel’s technology for virtualization on the x86 platform. On November 13, 2005, Intel released two models of Pentium 4 (Model 662 and 672) as the first Intel processors to support VT-x. The CPU flag for VT-x capability is “vmx”; in Linux, this can be checked via /proc/cpuinfo, or in macOS via sysctl machdep.cpu.features.[2]

For example, on CentOS 7.6:

```shell
[root@test ~]# cat /proc/cpuinfo | grep vmx | head -1
```

or on an Intel-based MacBook Pro (2020):

```shell
➜ ~ sysctl machdep.cpu.features | grep -i vmx
```

In both cases the vmx flag is listed, so VT-x is available.
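`grep vmx` only checks whether the substring appears somewhere in the line. A slightly stricter check, sketched below in C under the assumption that you already have the `flags : ...` line of /proc/cpuinfo in a string, splits the text after the colon on whitespace and compares whole tokens. The helper name `has_cpu_flag` is hypothetical.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical helper: returns true if `flag` appears as a whole,
 * whitespace-separated token after the ':' of a /proc/cpuinfo
 * "flags" line, e.g. "flags\t\t: fpu vme de pse vmx". */
bool has_cpu_flag(const char *flags_line, const char *flag)
{
    const char *p = strchr(flags_line, ':');
    size_t flag_len = strlen(flag);

    if (p == NULL || flag_len == 0)
        return false;
    p++; /* skip the ':' */

    while (*p != '\0') {
        /* Skip whitespace between tokens. */
        while (*p == ' ' || *p == '\t' || *p == '\n')
            p++;
        /* Measure the current token. */
        const char *start = p;
        while (*p != '\0' && *p != ' ' && *p != '\t' && *p != '\n')
            p++;
        size_t len = (size_t)(p - start);
        if (len == flag_len && strncmp(start, flag, flag_len) == 0)
            return true;
    }
    return false;
}
```

In a real program you would read /proc/cpuinfo line by line (for example with `fgets`) and pass the first line that begins with `flags`.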

“VMX” stands for Virtual Machine Extensions, which adds 13 new instructions: VMPTRLD, VMPTRST, VMCLEAR, VMREAD, VMWRITE, VMCALL, VMLAUNCH, VMRESUME, VMXOFF, VMXON, INVEPT, INVVPID, and VMFUNC. These instructions permit entering and exiting a virtual execution mode where the guest OS perceives itself as running with full privilege (ring 0), but the host OS remains protected.[2]

Note: the virtual execution mode is an important concept.

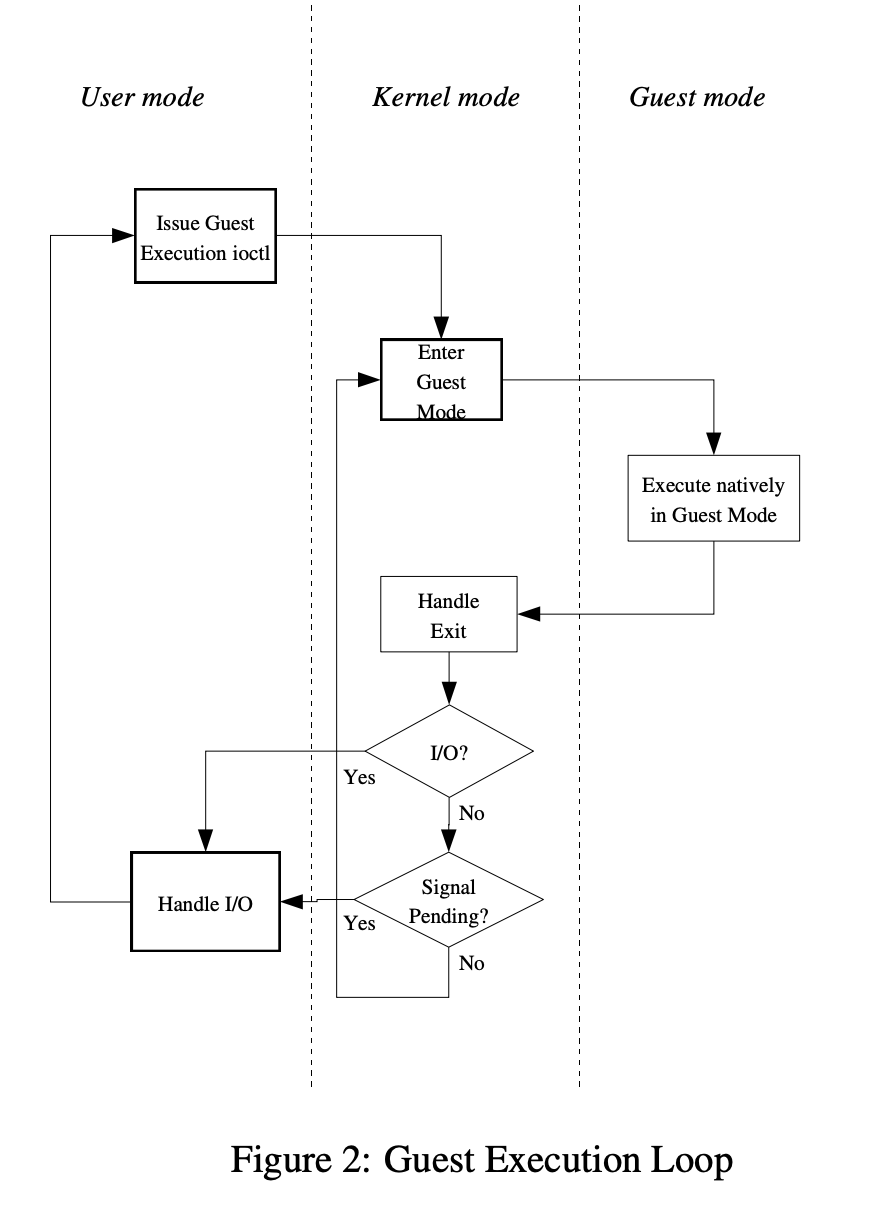

According to the paper "kvm: the Linux Virtual Machine Monitor", KVM is designed to add a guest mode, joining the existing kernel mode and user mode.

In guest mode, CPU instructions execute natively. When the guest issues an I/O request or an external event arrives (typically a received network packet or a timeout), the CPU has to exit guest mode. KVM then redirects the I/O or signal handling to a user-mode process, which emulates the device and performs the actual I/O. Once the I/O has been handled, KVM enters guest mode again and the guest resumes executing its instructions.

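The kernel's basic job is handling these exits and re-entries. The user-mode process calls into the kernel to enter guest mode, and the guest keeps running until it is interrupted by an exit that the kernel cannot handle on its own.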
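The loop just described is what a user-mode VMM such as QEMU drives through the /dev/kvm ioctl interface. Below is a minimal sketch in the style of common "hello world" KVM examples, not QEMU's code: it gives the VM one page of memory, loads a few bytes of 16-bit real-mode x86 code, and calls `KVM_RUN` in a loop. A port I/O exit (`KVM_EXIT_IO`) is "emulated" in user space by reading the byte the guest wrote, and `KVM_EXIT_HLT` ends the loop. It assumes an x86-64 Linux host with `<linux/kvm.h>` installed; the function name `run_tiny_guest`, the guest address 0x1000 and the port 0x3f8 are arbitrary choices, and error handling is minimal.

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <linux/kvm.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

/* 16-bit real-mode guest code: write 'A' to port 0x3f8, then halt. */
static const uint8_t guest_code[] = {
    0xba, 0xf8, 0x03, /* mov $0x3f8, %dx */
    0xb0, 'A',        /* mov $'A', %al   */
    0xee,             /* out %al, (%dx)  */
    0xf4,             /* hlt             */
};

/* Runs the guest and returns the byte it wrote to port 0x3f8, or -1 on
 * any failure (including /dev/kvm being unavailable). */
int run_tiny_guest(void)
{
    int result = -1, byte = -1, kvm = -1, vm = -1, vcpu = -1, run_size = 0;
    void *mem = MAP_FAILED;
    struct kvm_run *run = MAP_FAILED;
    struct kvm_userspace_memory_region region;
    struct kvm_sregs sregs;
    struct kvm_regs regs;

    if ((kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC)) < 0)
        goto out;
    if ((vm = ioctl(kvm, KVM_CREATE_VM, 0UL)) < 0)
        goto out;

    /* One page of this process's memory backs guest physical 0x1000. */
    mem = mmap(NULL, 0x1000, PROT_READ | PROT_WRITE,
               MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        goto out;
    memcpy(mem, guest_code, sizeof(guest_code));
    region = (struct kvm_userspace_memory_region){
        .slot = 0, .guest_phys_addr = 0x1000, .memory_size = 0x1000,
        .userspace_addr = (uint64_t)(uintptr_t)mem };
    if (ioctl(vm, KVM_SET_USER_MEMORY_REGION, &region) < 0)
        goto out;

    /* Create one vCPU and map the kvm_run area it shares with us. */
    if ((vcpu = ioctl(vm, KVM_CREATE_VCPU, 0UL)) < 0)
        goto out;
    if ((run_size = ioctl(kvm, KVM_GET_VCPU_MMAP_SIZE, NULL)) <= 0)
        goto out;
    run = mmap(NULL, (size_t)run_size, PROT_READ | PROT_WRITE,
               MAP_SHARED, vcpu, 0);
    if (run == MAP_FAILED)
        goto out;

    /* Real mode with CS base 0; execution starts at 0x1000. */
    if (ioctl(vcpu, KVM_GET_SREGS, &sregs) < 0)
        goto out;
    sregs.cs.base = 0;
    sregs.cs.selector = 0;
    if (ioctl(vcpu, KVM_SET_SREGS, &sregs) < 0)
        goto out;
    regs = (struct kvm_regs){ .rip = 0x1000, .rflags = 0x2 }; /* bit 1 must be set */
    if (ioctl(vcpu, KVM_SET_REGS, &regs) < 0)
        goto out;

    /* The guest runs natively inside KVM_RUN until an exit that user
     * space has to handle. */
    for (;;) {
        if (ioctl(vcpu, KVM_RUN, NULL) < 0)
            goto out;
        if (run->exit_reason == KVM_EXIT_HLT) {
            result = byte;              /* clean halt */
            goto out;
        }
        if (run->exit_reason != KVM_EXIT_IO ||
            run->io.direction != KVM_EXIT_IO_OUT || run->io.port != 0x3f8 ||
            run->io.size != 1 || run->io.count != 1)
            goto out;                   /* any other exit: give up */
        /* "Emulate" the device: fetch the byte the guest wrote. */
        byte = *((uint8_t *)run + run->io.data_offset);
    }
out:
    if (run != MAP_FAILED) munmap(run, (size_t)run_size);
    if (mem != MAP_FAILED) munmap(mem, 0x1000);
    if (vcpu >= 0) close(vcpu);
    if (vm >= 0) close(vm);
    if (kvm >= 0) close(kvm);
    return result;
}
```

On an x86-64 machine with an accessible /dev/kvm the function is expected to return 'A'; elsewhere it returns -1. Note how little the user-space side does: everything between two exits executes natively in guest mode.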

Emulation & Binary translation

In computing, binary translation is a form of binary recompilation where sequences of instructions are translated from a source instruction set to the target instruction set. In some cases such as instruction set simulation, the target instruction set may be the same as the source instruction set, providing testing and debugging features such as instruction trace, conditional breakpoints and hot spot detection.

The two main types are static and dynamic binary translation. Translation can be done in hardware (for example, by circuits in a CPU) or in software (e.g. run-time engines, static recompiler, emulators).[3]

Emulators are mostly used to run software on a platform that does not natively support it. For example, OpenEmu (https://github.com/OpenEmu/OpenEmu) is a multi-system video game emulator. This is the main advantage of emulators.

The disadvantage is that binary translation may require scanning the instruction stream, and when it is used to translate CPU instructions, the result runs slower than native execution. Translator internals will be covered in a later post.
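To make the cost concrete, here is a minimal decode-and-dispatch interpreter for a made-up guest ISA; the opcodes, the four-register file and the `emulate` function are all hypothetical. Every guest instruction costs a host fetch, a decode and a `switch` dispatch, which is why pure emulation is much slower than native execution; a binary translator tries to pay the decoding cost once per block of code instead of once per executed instruction.

```c
#include <stddef.h>
#include <stdint.h>

/* A made-up guest ISA with four registers. */
enum op { OP_LOADI, OP_ADD, OP_DEC, OP_JNZ, OP_HALT };

struct insn {
    enum op op;
    uint8_t rd;   /* destination (or tested) register */
    uint8_t rs;   /* source register for OP_ADD */
    int32_t imm;  /* immediate for OP_LOADI, target pc for OP_JNZ */
};

/* Interprets `prog` until OP_HALT. Returns register r0 and stores the
 * number of guest instructions executed in *steps. Returns -1 on a bad
 * pc, an unknown opcode, or when max_steps is exceeded. */
int32_t emulate(const struct insn *prog, size_t len, size_t max_steps,
                size_t *steps)
{
    int32_t r[4] = {0};
    size_t pc = 0;

    for (*steps = 0; *steps < max_steps; ) {
        if (pc >= len)
            return -1;
        const struct insn *i = &prog[pc++];    /* fetch */
        (*steps)++;
        switch (i->op) {                        /* decode + dispatch */
        case OP_LOADI: r[i->rd & 3] = i->imm;                 break;
        case OP_ADD:   r[i->rd & 3] += r[i->rs & 3];          break;
        case OP_DEC:   r[i->rd & 3] -= 1;                     break;
        case OP_JNZ:   if (r[i->rd & 3] != 0) pc = (size_t)i->imm; break;
        case OP_HALT:  return r[0];
        default:       return -1;
        }
    }
    return -1;
}
```

For example, the six-instruction loop `LOADI r0,0; LOADI r1,5; ADD r0,r1; DEC r1; JNZ r1,2; HALT` returns 15 (5+4+3+2+1) after 18 dispatched guest instructions, each of which costs the host several instructions of its own.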

Avi Kivity

Mad C++ developer, proud grandfather of KVM. Now working on @ScyllaDB, an open source drop-in replacement for Cassandra that’s 10X faster. Hiring (remotes too).

from https://twitter.com/avikivity

Avi Kivity began the development of KVM in mid-2006 at Qumranet, a technology startup company that was acquired by Red Hat in 2008. KVM surfaced in October, 2006 and was merged into the Linux kernel mainline in kernel version 2.6.20, which was released on 5 February 2007.

KVM is maintained by Paolo Bonzini. [1]

Virtualization

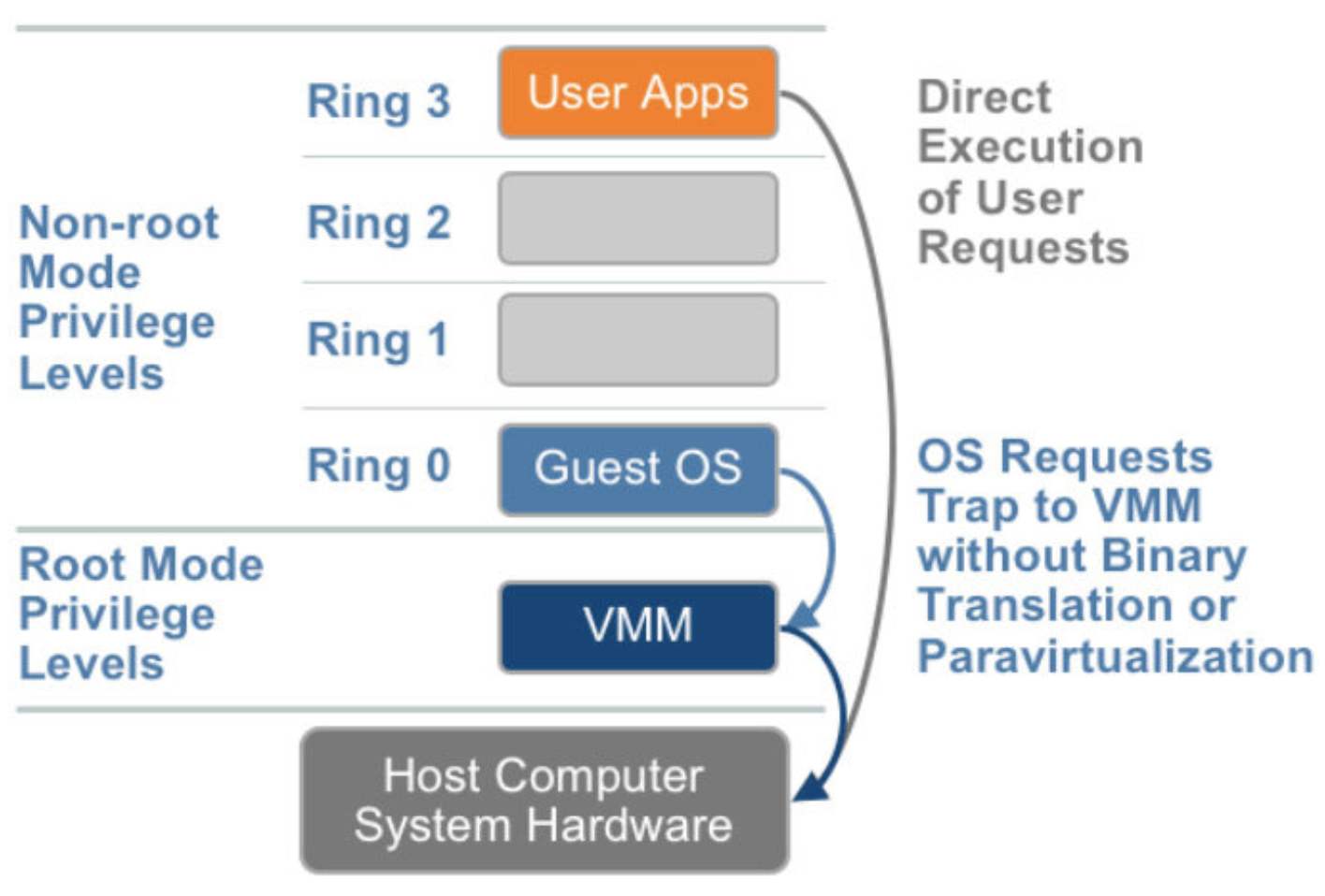

With hardware-assisted virtualization, the VMM runs below the guest OS's ring 0. User applications execute their requests directly, and sensitive OS calls trap to the VMM automatically, without binary translation or paravirtualization, so overhead is reduced.

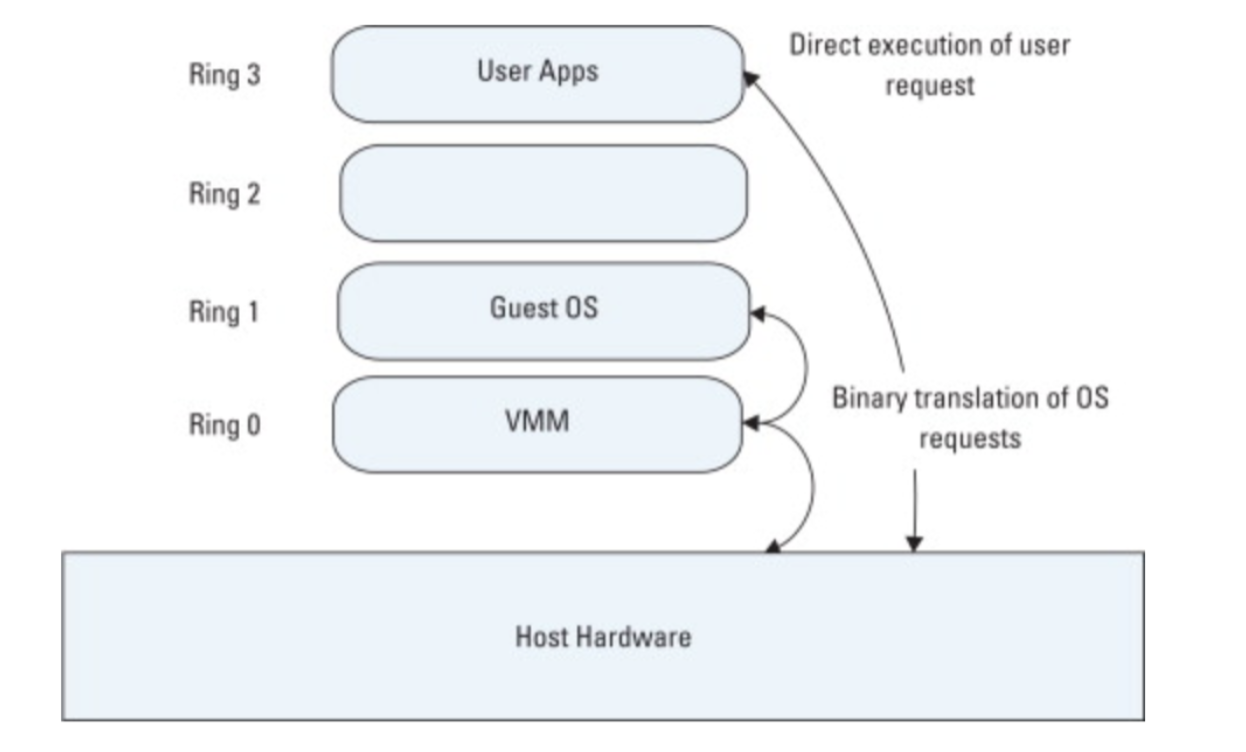

But in the older full-virtualization design, without hardware assistance:

The guest OS runs in ring 1 and the VMM runs in ring 0. Guest OS requests trap to the VMM, and the instructions are finally executed only after binary translation.
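The scan-and-rewrite step can be sketched as follows. This is a toy model, not how any real VMM encodes x86: the guest code is an array of made-up opcodes, a few of which are marked "sensitive" because they touch privileged state and would not behave correctly when the guest kernel runs in ring 1. The translator copies a block, replaces each sensitive opcode with a call into the VMM, and leaves everything else to run unmodified. All names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Made-up guest opcodes. */
enum gop {
    G_ADD, G_LOAD, G_STORE,   /* innocuous: run as is */
    G_CLI, G_STI, G_MOV_CR3,  /* sensitive: touch privileged state */
    G_VMM_CALL                /* emitted by the translator only */
};

static bool is_sensitive(enum gop op)
{
    return op == G_CLI || op == G_STI || op == G_MOV_CR3;
}

/* Copies `len` guest opcodes from `in` to `out`, replacing each
 * sensitive one with G_VMM_CALL. `orig` keeps the original opcodes so
 * the VMM knows what to emulate. Returns how many were replaced. */
size_t translate_block(const enum gop *in, enum gop *out, enum gop *orig,
                       size_t len)
{
    size_t replaced = 0;
    for (size_t i = 0; i < len; i++) {
        orig[i] = in[i];              /* remember what the guest wrote */
        if (is_sensitive(in[i])) {
            out[i] = G_VMM_CALL;      /* enter the VMM instead */
            replaced++;
        } else {
            out[i] = in[i];           /* safe to run unmodified */
        }
    }
    return replaced;
}
```

Real translators work on variable-length x86 instructions and also have to patch jumps between translated blocks, which this sketch ignores.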

KVM Details

Memory map

From the guest OS's perspective, physical memory is already there, and virtual memory is allocated on top of it. When the guest accesses a guest virtual address (GVA), it translates it to a guest physical address (GPA) using its own page tables and tlb, following the usual Linux rules. There is no difference from a Linux guest running on a real server.

From the host's perspective, starting a guest means allocating a region of memory to serve as the GPA space. So every GPA maps to a host virtual address (HVA), which in turn maps to a host physical address (HPA).

So typically, when a guest accesses a virtual memory address, the chain is:

GVA -> GPA -> HVA -> HPA

At least three translations are needed.
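The chain can be traced with a toy model: single-level page tables with 4 KiB pages, one memory slot, and a made-up host page table. The slot structure loosely mirrors the fields of KVM's `struct kvm_userspace_memory_region` (`guest_phys_addr`, `memory_size`, `userspace_addr`), but the types, table sizes and function names are hypothetical, and real x86 page tables have four or five levels.

```c
#include <stdbool.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT 12
#define TOY_PAGE_SIZE  (1ULL << TOY_PAGE_SHIFT)
#define NPAGES         16              /* toy address spaces: 16 pages */

struct pte { bool present; uint64_t pfn; };

/* Loosely mirrors struct kvm_userspace_memory_region. */
struct memslot {
    uint64_t guest_phys_addr;
    uint64_t memory_size;
    uint64_t userspace_addr;           /* HVA where the slot begins */
};

struct toy_vm {
    struct pte guest_pt[NPAGES];       /* GVA page -> GPA page (guest-owned) */
    struct memslot slot;               /* GPA -> HVA (set up by user space) */
    uint64_t host_va_base;             /* first HVA covered by host_pt */
    struct pte host_pt[NPAGES];        /* HVA page -> HPA page (host kernel) */
};

/* One page-table lookup: keeps the page offset, swaps the frame. */
static bool walk(const struct pte *pt, uint64_t addr, uint64_t *out)
{
    uint64_t vpn = addr >> TOY_PAGE_SHIFT;
    if (vpn >= NPAGES || !pt[vpn].present)
        return false;
    *out = (pt[vpn].pfn << TOY_PAGE_SHIFT) | (addr & (TOY_PAGE_SIZE - 1));
    return true;
}

/* GVA -> GPA -> HVA -> HPA. Returns false if any step faults. */
bool toy_gva_to_hpa(const struct toy_vm *vm, uint64_t gva, uint64_t *hpa)
{
    uint64_t gpa, hva;

    if (!walk(vm->guest_pt, gva, &gpa))              /* 1: guest page table */
        return false;
    if (gpa < vm->slot.guest_phys_addr ||
        gpa - vm->slot.guest_phys_addr >= vm->slot.memory_size)
        return false;                                 /* 2: memslot lookup */
    hva = vm->slot.userspace_addr + (gpa - vm->slot.guest_phys_addr);
    if (hva < vm->host_va_base)
        return false;
    return walk(vm->host_pt, hva - vm->host_va_base, hpa); /* 3: host page table */
}
```

The three lookups correspond to the guest's own page tables, the memory slots that user space registers with KVM, and the host kernel's page tables.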

Nowadays, CPUs offer EPT (Intel) or NPT (AMD), which let the hardware translate GPA -> HPA directly and so accelerate this chain. We will cover them in a later post.

vMMU

The x86 MMU consists of:

- A radix tree, the page table, encoding the virtual-to-physical translation. This tree is provided by system software in physical memory, but is rooted in a hardware register (the cr3 register)

- A mechanism to notify system software of missing translations (page faults)

- An on-chip cache (the translation lookaside buffer, or tlb) that accelerates lookups of the page table

- Instructions for switching the translation root in order to provide independent address spaces

- Instructions for managing the tlb
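A minimal sketch tying those components together, assuming a two-level radix tree with 10-bit indices and 4 KiB pages (as in classic 32-bit x86 paging), a tiny direct-mapped tlb, and a `false` return standing in for a page fault. A variable plays the role of cr3, and `switch_root` models a cr3 write, which on x86 (without PCID) also flushes the non-global tlb entries. Everything here is a simplified model with hypothetical names, not kernel code.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT 12
#define TLB_SIZE       8
#define PTE_PRESENT    1u

/* Two-level radix tree: a directory of 1024 pointers to tables of
 * 1024 entries; an entry is (frame << 12) | PTE_PRESENT. */
struct page_table { uint32_t pte[1024]; };
struct page_dir   { struct page_table *pde[1024]; };

struct tlb_entry { bool valid; uint32_t vpn, pfn; };

struct mmu {
    const struct page_dir *cr3;        /* translation root */
    struct tlb_entry tlb[TLB_SIZE];    /* direct-mapped tlb */
    unsigned walks;                    /* page-table walks performed */
};

/* tlb management: invalidate every entry. */
void tlb_flush_all(struct mmu *m)
{
    for (size_t i = 0; i < TLB_SIZE; i++)
        m->tlb[i].valid = false;
}

/* Switching the translation root, like a cr3 write. */
void switch_root(struct mmu *m, const struct page_dir *root)
{
    m->cr3 = root;
    tlb_flush_all(m);
}

/* Returns true and sets *pa, or returns false to signal a page fault. */
bool translate(struct mmu *m, uint32_t va, uint32_t *pa)
{
    uint32_t vpn = va >> TOY_PAGE_SHIFT;
    struct tlb_entry *e = &m->tlb[vpn % TLB_SIZE];

    if (!e->valid || e->vpn != vpn) {          /* tlb miss: walk the tree */
        m->walks++;
        const struct page_table *pt = m->cr3 ? m->cr3->pde[vpn >> 10] : NULL;
        if (pt == NULL || !(pt->pte[vpn & 0x3ff] & PTE_PRESENT))
            return false;                      /* missing translation */
        e->valid = true;
        e->vpn = vpn;
        e->pfn = pt->pte[vpn & 0x3ff] >> TOY_PAGE_SHIFT;
    }
    *pa = (e->pfn << TOY_PAGE_SHIFT) | (va & 0xfffu);
    return true;
}
```

Repeated accesses to the same page hit the tlb and skip the walk, while every `switch_root` empties it again; that flush is the behavior the shadow-page-table discussion below has to cope with.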

As noted under Memory map, KVM has to provide the GPA -> HVA mapping.

Without hardware assistance, KVM maintains a shadow page table that maps GVA directly to HPA, combining the guest's GVA -> GPA translation with the host's own mapping. The benefit is lower runtime translation overhead, since the hardware walks a single table. The main problem is keeping the guest page table and the shadow page table in sync: whenever the guest writes its page table, the shadow page table must be updated too, so the virtual MMU needs hooks to intercept those writes.
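The synchronization problem can be sketched with a toy model. The guest owns a page table mapping guest virtual pages to guest physical pages, the host knows which host physical page backs each guest physical page, and the shadow table (the one the hardware would actually walk) holds the combined mapping. Guest page-table writes go through a hook; in a real VMM they trap because the guest page table is write-protected. Table sizes and names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define NPAGES  16
#define INVALID UINT64_MAX

struct shadow_vm {
    uint64_t guest_pt[NPAGES];    /* GVA page -> GPA page (guest-owned)    */
    uint64_t gpa_to_hpa[NPAGES];  /* GPA page -> HPA page (host-owned)     */
    uint64_t shadow_pt[NPAGES];   /* GVA page -> HPA page (what hw walks)  */
    unsigned traps;               /* guest page-table writes intercepted  */
};

void shadow_init(struct shadow_vm *vm)
{
    for (int i = 0; i < NPAGES; i++) {
        vm->guest_pt[i] = INVALID;
        vm->gpa_to_hpa[i] = INVALID;
        vm->shadow_pt[i] = INVALID;
    }
    vm->traps = 0;
}

/* Hook run when the guest writes one of its page-table entries. */
bool guest_write_pte(struct shadow_vm *vm, uint64_t gva_page, uint64_t gpa_page)
{
    if (gva_page >= NPAGES)
        return false;
    vm->traps++;
    vm->guest_pt[gva_page] = gpa_page;            /* emulate the write */
    vm->shadow_pt[gva_page] =                      /* keep shadow in sync */
        gpa_page < NPAGES ? vm->gpa_to_hpa[gpa_page] : INVALID;
    return true;
}

/* What the hardware does: a single lookup straight to host physical. */
uint64_t shadow_lookup(const struct shadow_vm *vm, uint64_t gva_page)
{
    return gva_page < NPAGES ? vm->shadow_pt[gva_page] : INVALID;
}
```

Each guest page-table write costs a trap into the VMM, which is the synchronization overhead described above; in exchange, a guest memory access needs only the single shadow lookup.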

Another problem is context switching. Shadow page tables rely on the guest keeping its tlb in sync with its page tables, so that trapping the guest's tlb management instructions tells the VMM when to resync the shadow. But the most common tlb management operation during a context switch invalidates the entire tlb, so the shadow page tables have to be synced all over again. This hurts performance when the VM runs many processes.

To improve guest performance, KVM's virtual MMU was enhanced to cache shadow page tables across context switches, at the cost of considerably more complex code. A context switch can then find the cached tables for the incoming process in the vMMU directly, so invalidating the whole tlb no longer forces them to be rebuilt.
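The caching idea can be sketched as a small cache keyed by the guest's page-table root (its cr3 value): on a cr3 write, the VMM first looks for a shadow root it already built for that guest root and only rebuilds on a miss. This is a toy model with a fixed-size, round-robin cache and a counter standing in for the expensive rebuild; KVM's real implementation is far more involved, and all names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define CACHE_SLOTS 4

struct shadow_root { uint64_t guest_cr3; int valid; unsigned id; };

struct shadow_cache {
    struct shadow_root slots[CACHE_SLOTS];
    size_t next_victim;   /* round-robin replacement */
    unsigned rebuilds;    /* expensive shadow-table builds */
    unsigned next_id;
};

/* Called when the guest writes cr3 (a context switch). Returns the id of
 * the shadow root to load, reusing a cached one when possible. */
unsigned on_guest_cr3_write(struct shadow_cache *c, uint64_t guest_cr3)
{
    for (size_t i = 0; i < CACHE_SLOTS; i++)
        if (c->slots[i].valid && c->slots[i].guest_cr3 == guest_cr3)
            return c->slots[i].id;              /* hit: no rebuild */

    struct shadow_root *r = &c->slots[c->next_victim];
    c->next_victim = (c->next_victim + 1) % CACHE_SLOTS;
    r->guest_cr3 = guest_cr3;
    r->valid = 1;
    r->id = c->next_id++;
    c->rebuilds++;                              /* miss: build from scratch */
    return r->id;
}
```

Switching back and forth between two processes costs two rebuilds here instead of one per switch. The cached tables still have to be kept in sync with later guest page-table writes, which is where much of the extra complexity comes from.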